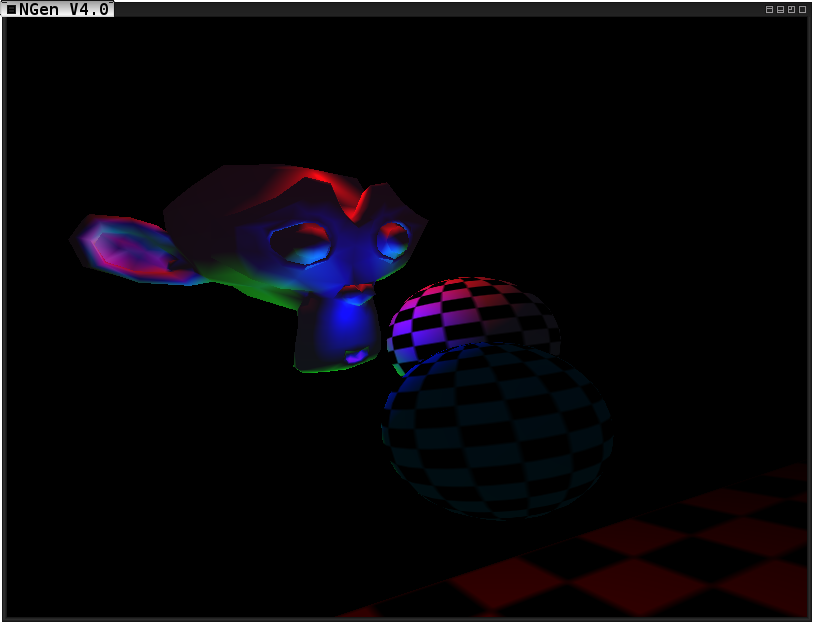

As the semester continues, so does the development of my ray tracer for my Global Illumination class at Rochester Institute of Technology. As planned, I have been developing a real-time ray tracer to integrate into my game engine, NGen. To make real-time ray tracing feasible, I will begin with the geometry pass of a deferred renderer, which projects the models onto the viewing plane and stores per-fragment information in several textures: the world position, world normal, and diffuse color of the visible surface. I will then use this information to cast the secondary rays for reflection, refraction, and shadows. These casts will use spatial partitioning data structures, and OpenCL where needed, to maintain an acceptable frame rate. Their results will be stored in textures, which are then sent to the lighting pass(es) of the deferred rendering pipeline.
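To make the second set of casts more concrete, here is a minimal C++ sketch of how one G-buffer fragment could be turned into reflection, refraction, and shadow rays. It is not NGen's code: `GBufferTexel`, `buildSecondaryRays`, the single point light, the refractive-index ratio `eta` (n1 / n2), and the `kSurfaceEpsilon` origin offset are hypothetical names and assumptions chosen for illustration. It assumes the stored normal is unit length and faces the camera. Note that the primary ray is never cast; its direction is recovered from the camera position and the stored world position.

```cpp
#include <cassert>
#include <cmath>
#include <optional>

struct Vec3 { double x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, double s) { return {a.x * s, a.y * s, a.z * s}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
double length(Vec3 a) { return std::sqrt(dot(a, a)); }
Vec3 normalize(Vec3 a) { return a * (1.0 / length(a)); }

// Hypothetical layout of one fragment written by the geometry pass.
struct GBufferTexel {
    Vec3 worldPosition;
    Vec3 worldNormal;   // unit length
    Vec3 diffuseColor;  // unused here; consumed later by the lighting pass
};

struct Ray { Vec3 origin; Vec3 direction; };

struct SecondaryRays {
    Ray reflection;
    std::optional<Ray> refraction;  // empty on total internal reflection
    Ray shadow;                     // toward a single point light (assumption)
    double shadowMaxT;              // distance to the light; hits past it cast no shadow
};

// Hypothetical offset that pushes ray origins off the surface to avoid self-intersection.
constexpr double kSurfaceEpsilon = 1e-4;

// Mirror reflection of incident direction i about unit normal n: r = i - 2(n.i)n.
Vec3 reflect(Vec3 i, Vec3 n) { return i - n * (2.0 * dot(n, i)); }

// Snell's-law refraction with eta = n1 / n2 (same formula as GLSL refract()).
std::optional<Vec3> refract(Vec3 i, Vec3 n, double eta) {
    double cosI = -dot(n, i);
    double k = 1.0 - eta * eta * (1.0 - cosI * cosI);
    if (k < 0.0) return std::nullopt;  // total internal reflection
    return i * eta + n * (eta * cosI - std::sqrt(k));
}

// Builds the secondary rays for one texel without casting the primary ray.
// Assumes a front face, i.e. dot(normal, primary direction) < 0.
SecondaryRays buildSecondaryRays(const GBufferTexel& t, Vec3 cameraPos, Vec3 lightPos, double eta) {
    assert(std::fabs(length(t.worldNormal) - 1.0) < 1e-6 && "normal must be unit length");
    Vec3 n = t.worldNormal;
    Vec3 primaryDir = normalize(t.worldPosition - cameraPos);  // recovered, not cast
    Vec3 above = t.worldPosition + n * kSurfaceEpsilon;  // origin for reflection and shadow rays
    Vec3 below = t.worldPosition - n * kSurfaceEpsilon;  // origin for the refracted ray

    SecondaryRays out{};
    out.reflection = {above, reflect(primaryDir, n)};
    if (auto dir = refract(primaryDir, n, eta)) out.refraction = Ray{below, normalize(*dir)};
    Vec3 toLight = lightPos - above;
    out.shadowMaxT = length(toLight);
    out.shadow = {above, toLight * (1.0 / out.shadowMaxT)};
    return out;
}
```

Offsetting the origins along the normal is one common way to keep a secondary ray from immediately re-hitting the surface it starts on: the refracted ray starts just below the surface, and the reflection and shadow rays start just above it.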

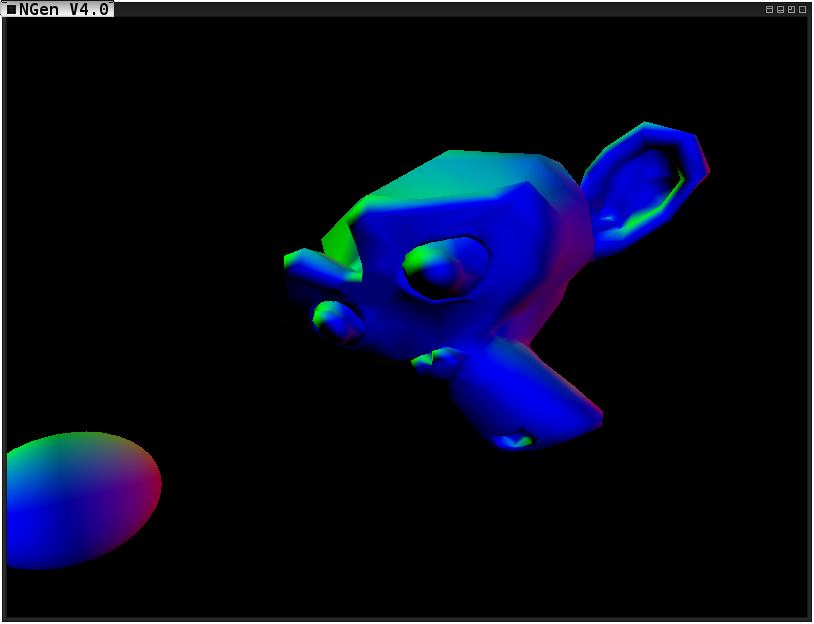

This checkpoint contains the ray tracing framework, which can cast a ray from an origin in a given direction and report which object it hits, the first point of intersection, the normalized minimum translation vector (the direction of smallest translation that would separate the intersecting objects), and the magnitude of overlap along that vector. This checkpoint also contains the deferred rendering pipeline, which I will use to generate the images and effectively skip the first set of ray casts.
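As an illustration of the four outputs listed above, the following C++ sketch casts a ray against a list of spheres. It is a hypothetical stand-in, not the framework's actual code: the sphere-only scene, `RayHit`, `castRay`, and `anyPerpendicular` are assumptions. For the minimum translation vector it treats the ray as an infinite line, so the separating axis points from the point on the line closest to the sphere's center toward that center, and the overlap is the radius minus that perpendicular distance.

```cpp
#include <cassert>
#include <cmath>
#include <limits>
#include <optional>
#include <vector>

struct Vec3 { double x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, double s) { return {a.x * s, a.y * s, a.z * s}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
double length(Vec3 a) { return std::sqrt(dot(a, a)); }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

struct Sphere { Vec3 center; double radius; };

// Hypothetical result type holding the four outputs the framework reports.
struct RayHit {
    int objectIndex;  // index of the object that was hit
    Vec3 point;       // first point of intersection along the ray
    Vec3 mtv;         // unit minimum translation vector: moves the object off the ray's line
    double overlap;   // overlap magnitude along mtv
};

// Any unit vector perpendicular to the unit vector d.
Vec3 anyPerpendicular(Vec3 d) {
    Vec3 axis = std::fabs(d.x) < 0.9 ? Vec3{1, 0, 0} : Vec3{0, 1, 0};
    Vec3 p = cross(d, axis);
    return p * (1.0 / length(p));
}

// Casts a ray and returns the nearest hit in front of the origin, if any.
std::optional<RayHit> castRay(Vec3 origin, Vec3 dir, const std::vector<Sphere>& spheres) {
    assert(std::fabs(length(dir) - 1.0) < 1e-6 && "dir must be normalized");
    std::optional<RayHit> best;
    double bestT = std::numeric_limits<double>::infinity();
    for (int i = 0; i < static_cast<int>(spheres.size()); ++i) {
        const Sphere& s = spheres[i];
        // Solve |origin + t*dir - center|^2 = r^2, i.e. t^2 + 2bt + c = 0.
        Vec3 oc = origin - s.center;
        double b = dot(oc, dir);
        double c = dot(oc, oc) - s.radius * s.radius;
        double disc = b * b - c;               // equals r^2 - (perpendicular distance)^2
        if (disc < 0.0) continue;              // the ray's line misses the sphere
        double sq = std::sqrt(disc);
        double t = -b - sq;                    // nearer root
        if (t < 0.0) t = -b + sq;              // origin inside the sphere: use the far root
        if (t < 0.0 || t >= bestT) continue;   // behind the origin, or not the closest hit
        // The point on the line closest to the center defines the separating axis.
        Vec3 toCenter = s.center - (origin + dir * -b);
        double dist = length(toCenter);
        Vec3 mtv = dist > 1e-12 ? toCenter * (1.0 / dist) : anyPerpendicular(dir);
        bestT = t;
        best = RayHit{i, origin + dir * t, mtv, s.radius - dist};
    }
    return best;
}
```

For example, with a unit sphere centered at the origin, a ray from (0, 0, -5) along +z reports object 0, an intersection point of (0, 0, -1), and an overlap of 1; because that line passes through the center, any perpendicular direction is a valid minimum translation vector.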

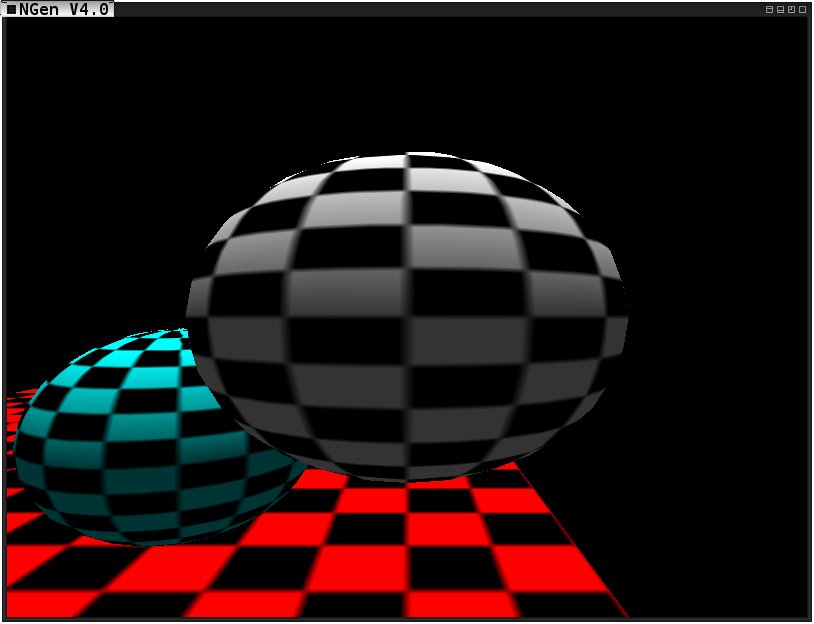

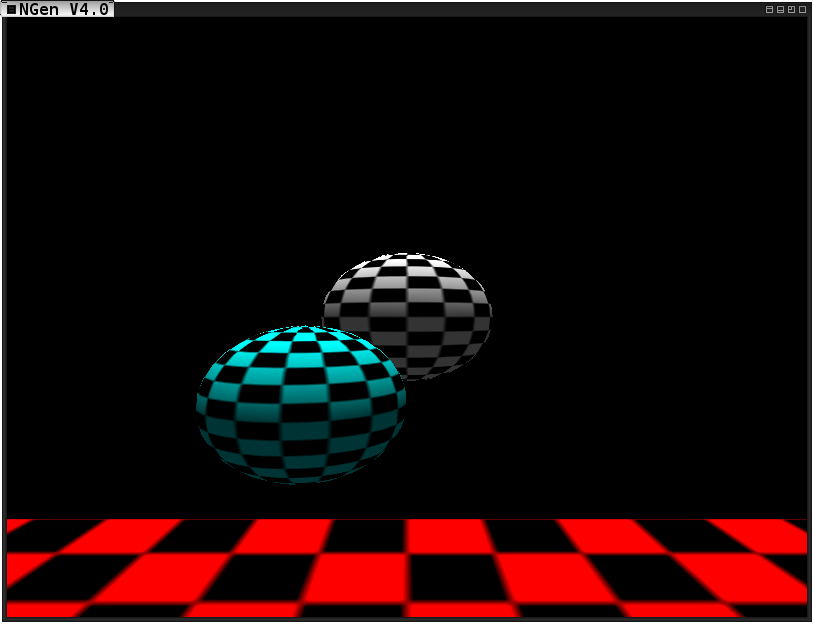

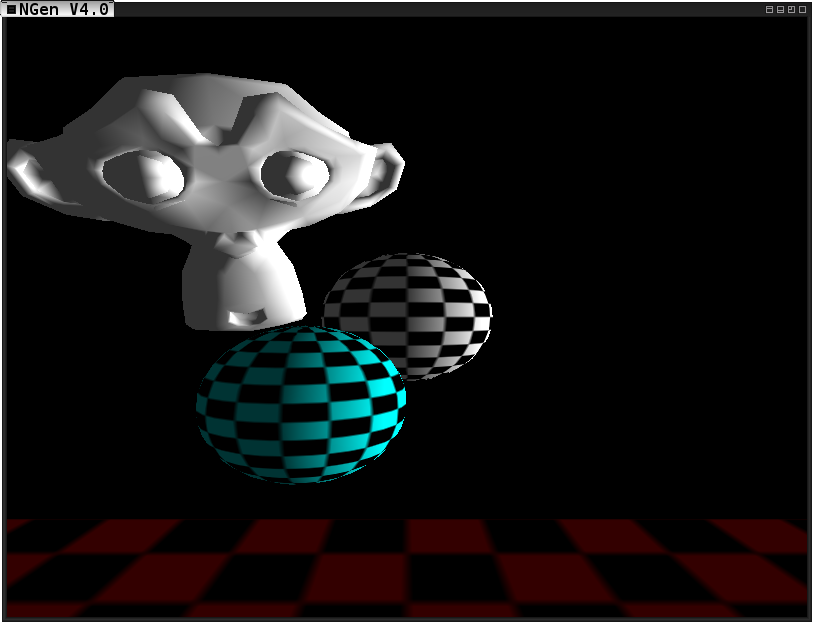

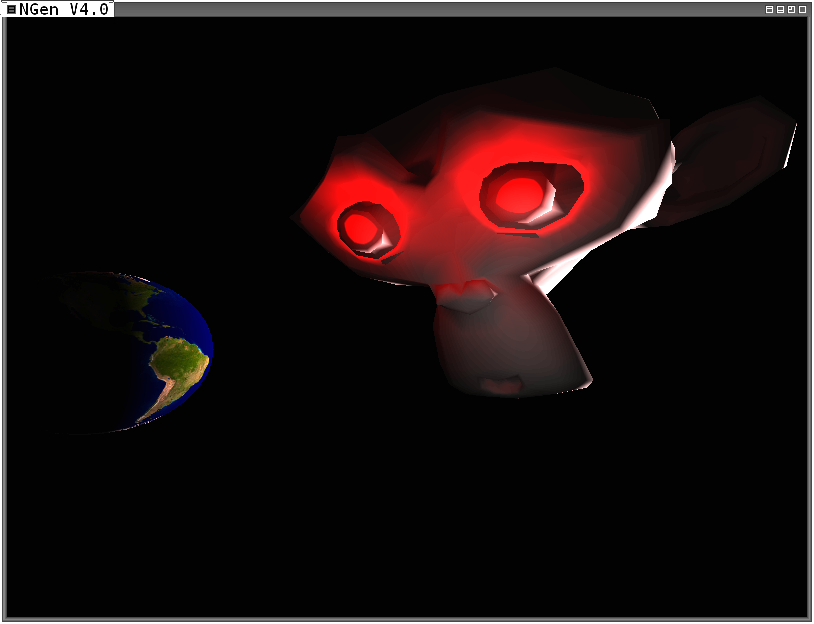

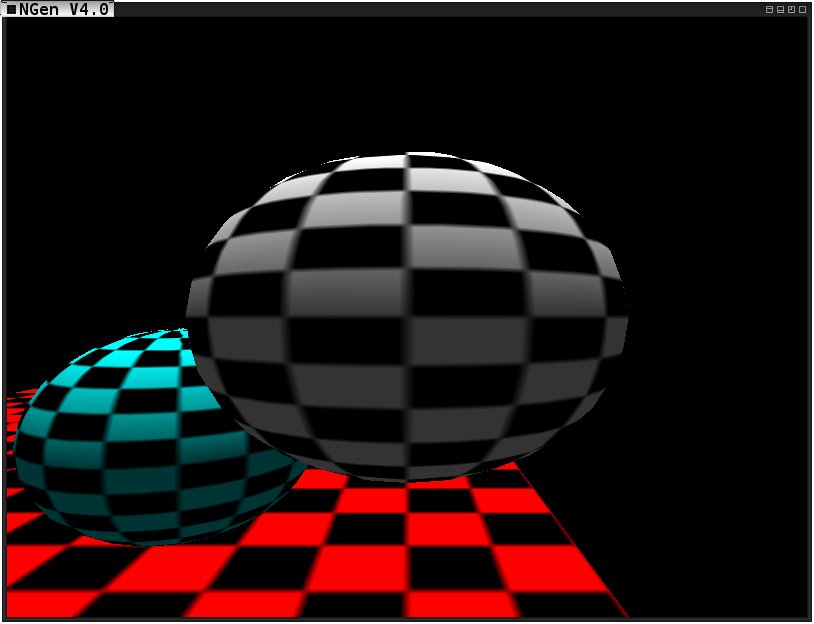

Here are some images depicting either my progress or me having fun doing what I do.